GPU Mining on Proxmox for Fun and Profit

I've got two machines running HiveOS at the moment, and a third machine that has a GPU but isn't yet mining.

By the end of this post I hope to have at least one of them mining Ethereum via HiveOS in a VM on Proxmox. This will let me add the hosts to Proxmox as compute nodes, giving me a bunch of compute "for free" without interrupting their mining.

Before you say it - yes, this is a bit late, given Ethereum is about to go proof-of-stake, but that's why I'm doing it - I want to be able to rapidly change to non-Ethereum workloads, including non-mining activities, when the time comes.

Preparation

Preparing the host

The first port of call is the Proxmox wiki.

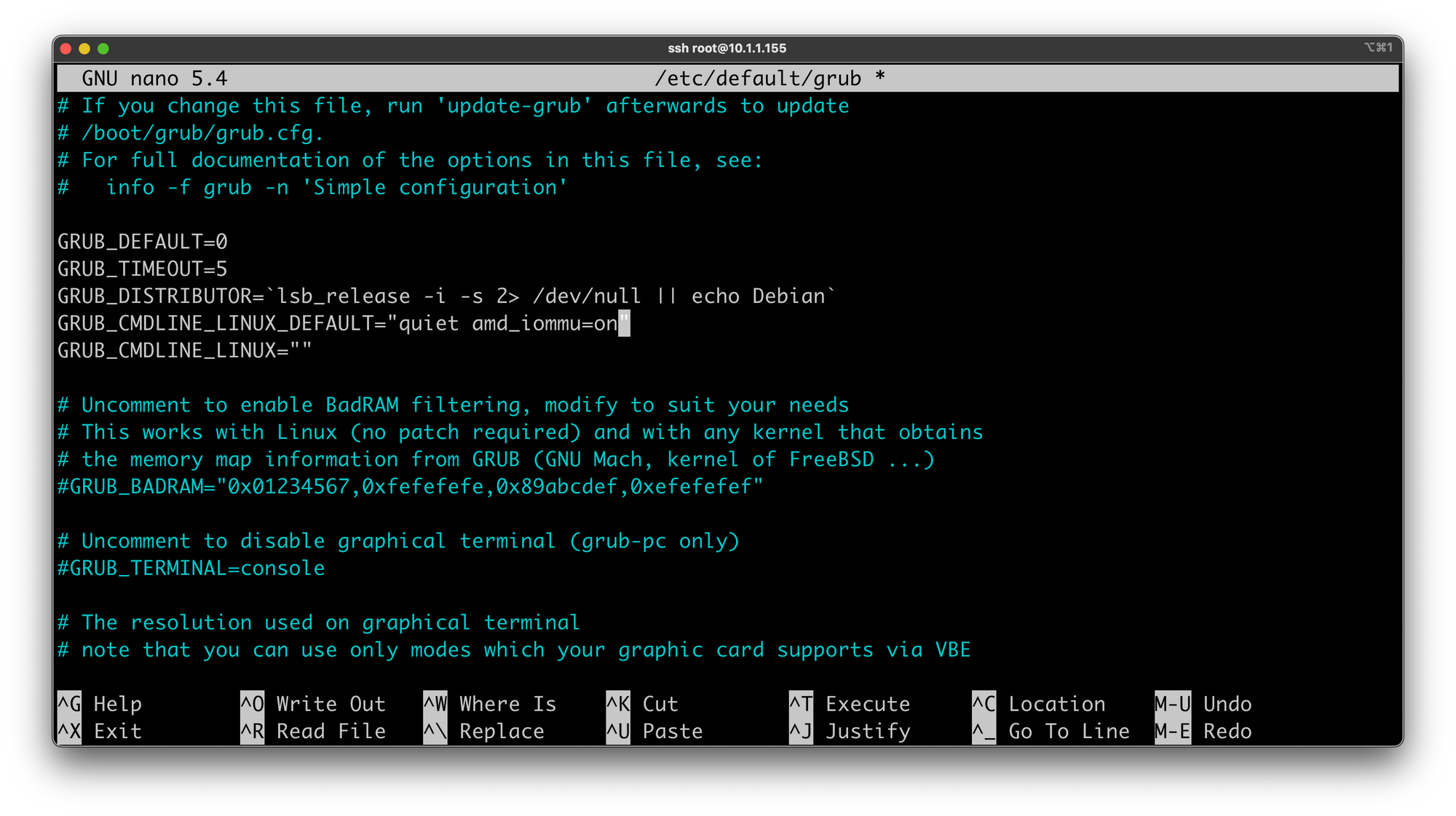

We'll test things out on Charmeleon, the tiny Fractal Define 302 box I just got, and its Radeon RX6800 GPU, before migrating the other two machines. A quick nano /etc/default/grub and we're off.

Then we update GRUB:

root@charmeleon:~# update-grub

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-5.13.19-2-pve

Found initrd image: /boot/initrd.img-5.13.19-2-pve

Found memtest86+ image: /boot/memtest86+.bin

Found memtest86+ multiboot image: /boot/memtest86+_multiboot.bin

Adding boot menu entry for EFI firmware configuration

done

After a reboot, we check if the IOMMU is enabled:

root@charmeleon:~# dmesg | grep -e DMAR -e IOMMU

[ 0.278845] pci 0000:00:00.2: AMD-Vi: IOMMU performance counters supported

[ 0.283959] pci 0000:00:00.2: AMD-Vi: Found IOMMU cap 0x40

[ 0.380930] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).

[ 6.573086] AMD-Vi: AMD IOMMUv2 driver by Joerg Roedel <jroedel@suse.de>

Then we add the following lines to /etc/modules:

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

And check if IOMMU interrupt remapping is possible:

root@charmeleon:~# dmesg | grep 'remapping'

[ 0.283971] AMD-Vi: Interrupt remapping enabled

Running find /sys/kernel/iommu_groups/ -type l shows multiple separate IOMMU groups - looks good so far!
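The raw find output is a flat list of symlinks, so it can be hard to see which devices share a group. Here is a minimal sketch that groups them, assuming the usual sysfs layout of /sys/kernel/iommu_groups/<group>/devices/<address>. The function name and its optional root argument are made up for illustration; the argument only exists so the function can be pointed at a test directory.

```shell
# List every IOMMU group alongside the PCI addresses it contains.
# The optional first argument (hypothetical, for testing) overrides sysfs.
list_iommu_groups() {
    local root="${1:-/sys/kernel/iommu_groups}"
    local group dev
    for group in "$root"/*; do
        [ -d "$group" ] || continue
        for dev in "$group"/devices/*; do
            [ -e "$dev" ] || continue
            # Strip the leading path to leave the group number and address.
            printf 'group %s: %s\n' "${group##*/}" "${dev##*/}"
        done
    done | sort -k2,2n   # numeric sort so group 10 comes after group 9
}
```

Running list_iommu_groups on the host prints one line per device. For passthrough, the GPU's functions (03:00.x here) should ideally not share a group with unrelated devices.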

Getting further into the article, we run across our first hint that things might not go smoothly...

AMD Navi (5xxx(XT)/6xxx(XT)) suffer from the reset bug (see https://github.com/gnif/vendor-reset), and while dedicated users have managed to get them to run, they require a lot more effort and will probably not work entirely stable

😬

Preparing a HiveOS VM

Grab the HiveOS image:

root@charmeleon:~# wget https://download.hiveos.farm/history/hiveos-0.6-217-stable@220423.img.xz

--2022-05-01 09:42:04-- https://download.hiveos.farm/history/hiveos-0.6-217-stable@220423.img.xz

Resolving download.hiveos.farm (download.hiveos.farm)... 104.22.11.47, 104.22.10.47, 172.67.28.84, ...

Connecting to download.hiveos.farm (download.hiveos.farm)|104.22.11.47|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1138821344 (1.1G) [application/octet-stream]

Saving to: ‘hiveos-0.6-217-stable@220423.img.xz’

hiveos-0.6-217-stable@22042 100%[=========================================>] 1.06G 10.9MB/s in 1m 51s

2022-05-01 09:43:56 (9.82 MB/s) - ‘hiveos-0.6-217-stable@220423.img.xz’ saved [1138821344/1138821344]

root@charmeleon:~# unxz hiveos-0.6-217-stable@220423.img.xz

Downloading and extracting HiveOS.

We create a new VM, rx6800, with 4GiB of RAM, 4 CPU cores and 20GiB of disk, and note its VMID: 114.

Then we import the HiveOS disk:

root@charmeleon:~# qm importdisk 114 hiveos-0.6-217-stable@220423.img local-lvm

importing disk 'hiveos-0.6-217-stable@220423.img' to VM 114 ...

Logical volume "vm-114-disk-1" created.

transferred 0.0 B of 7.0 GiB (0.00%)

transferred 72.0 MiB of 7.0 GiB (1.00%)

transferred 144.7 MiB of 7.0 GiB (2.01%)

transferred 218.2 MiB of 7.0 GiB (3.03%)

...

transferred 7.0 GiB of 7.0 GiB (99.19%)

transferred 7.0 GiB of 7.0 GiB (100.00%)

transferred 7.0 GiB of 7.0 GiB (100.00%)

Successfully imported disk as 'unused1:local-lvm:vm-114-disk-1'

Importing the HiveOS image.

And add it to our machine in Proxmox by double-clicking it.

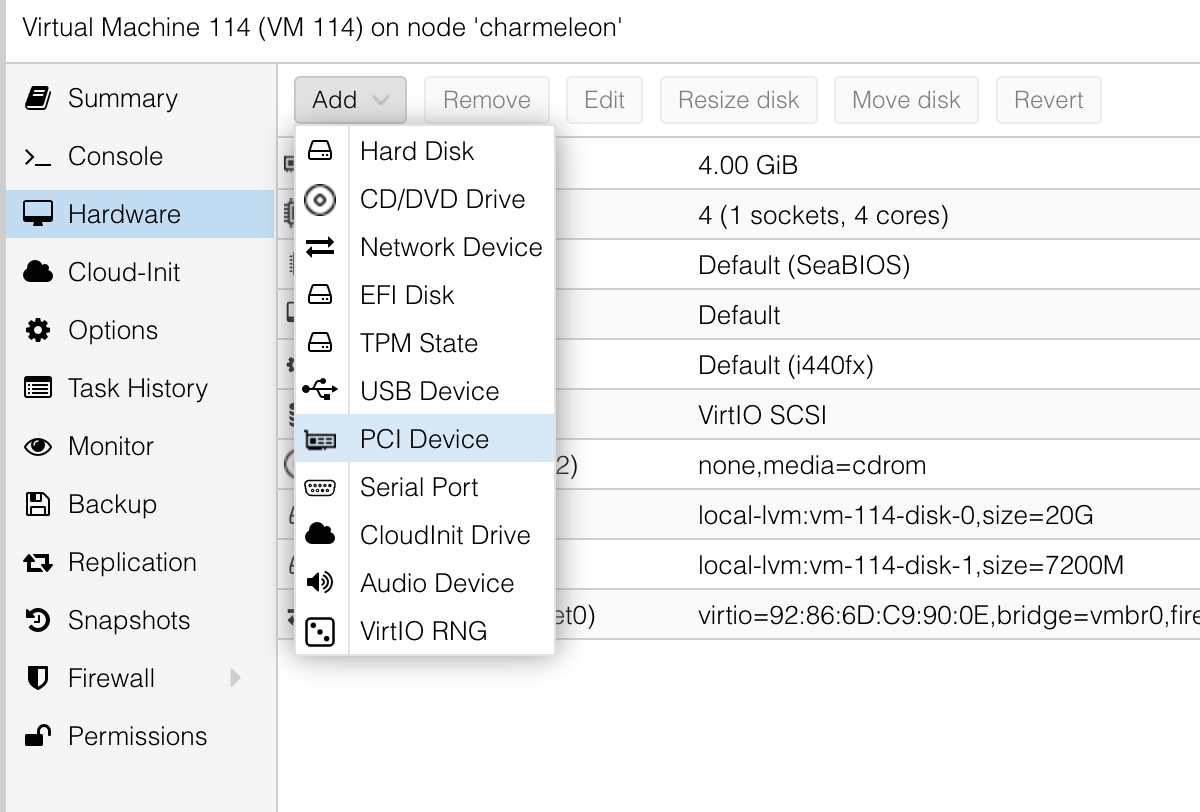

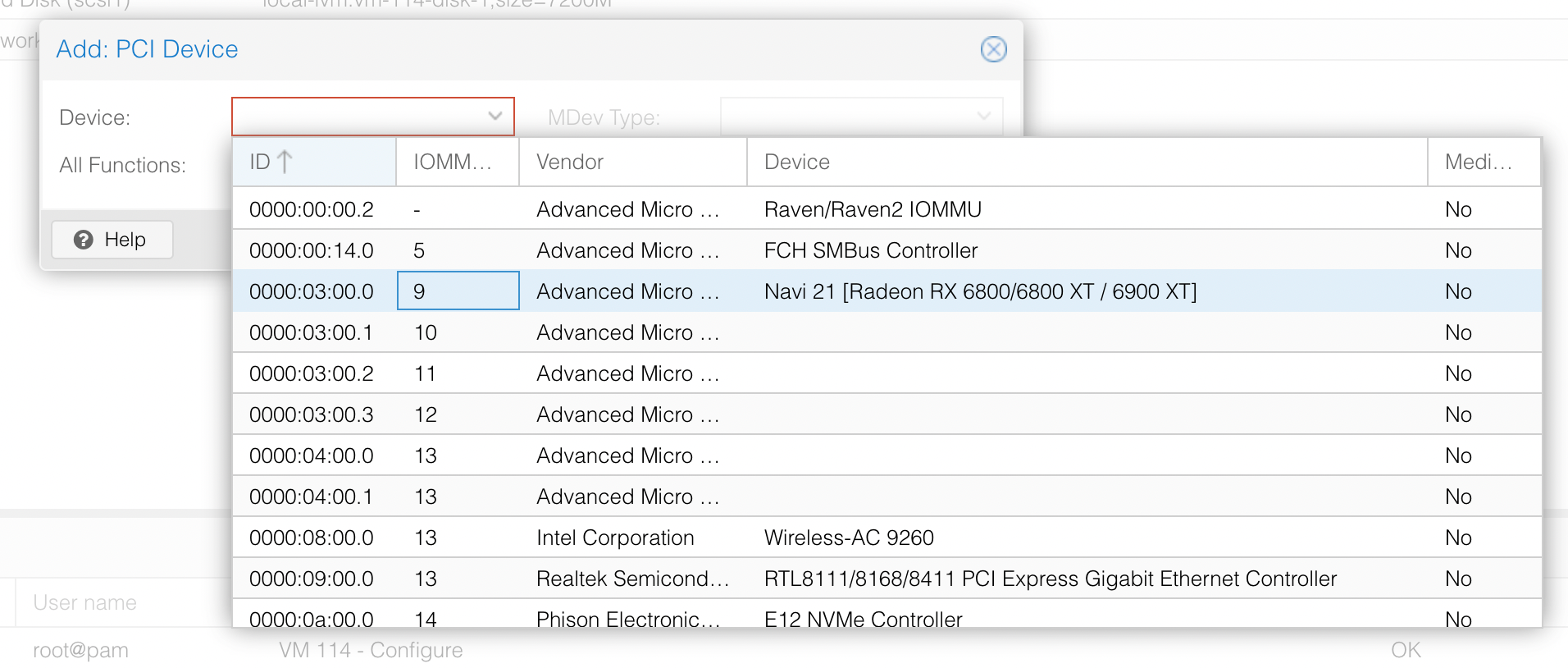

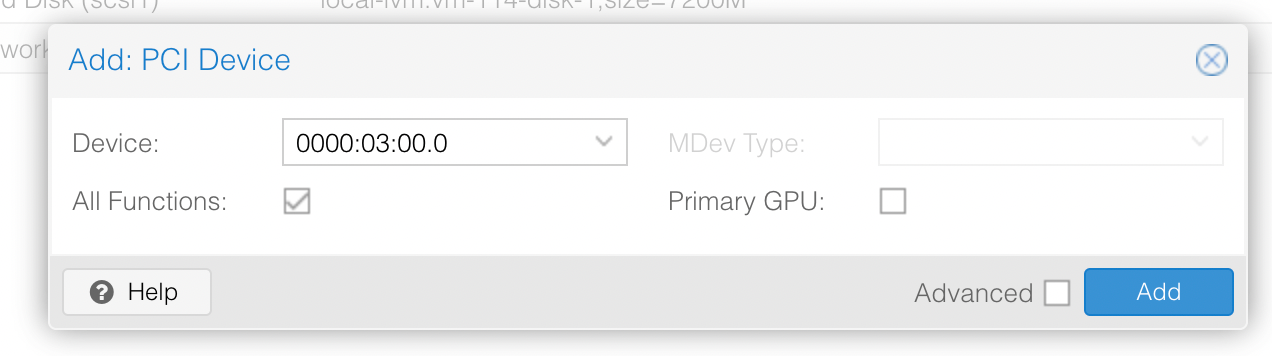

Now it's time to add our GPU.

We're not quite there yet: there's still some work to do on the host.

# Find my GPU

root@charmeleon:~# lspci

...

03:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Navi 21 [Radeon RX 6800/6800 XT / 6900 XT] (rev c3)

...

# Get its numeric vendor:device ID

root@charmeleon:~# lspci -n -s 03:00.0

03:00.0 0300: 1002:73bf (rev c3)

# Load VFIO-PCI for my GPU

echo "options vfio-pci ids=1002:73bf disable_vga=1" > /etc/modprobe.d/vfio.conf

# Blacklist drivers

echo "blacklist radeon" >> /etc/modprobe.d/blacklist.conf

echo "blacklist nouveau" >> /etc/modprobe.d/blacklist.conf

echo "blacklist nvidia" >> /etc/modprobe.d/blacklist.conf

A quick reboot later, and we're almost ready to rock.
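After a reboot like this, one way to confirm that vfio-pci actually claimed the card is to check which kernel driver is bound to it. The sketch below parses the output of lspci -k, which prints a "Kernel driver in use:" line under each device. The helper name drivers_in_use is hypothetical, and it reads from stdin so it can be run against saved output as well.

```shell
# Print "<address> <driver>" for each PCI function in `lspci -k` output.
# Reads the lspci output on stdin.
drivers_in_use() {
    awk '
        /^[0-9a-f]/             { addr = $1 }        # unindented device header line
        /Kernel driver in use:/ { print addr, $NF }  # indented detail line
    '
}

# Example on the host:
#   lspci -k -s 03:00 | drivers_in_use
```

If the GPU still shows its normal graphics driver rather than vfio-pci, the host grabbed the card before VFIO could.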

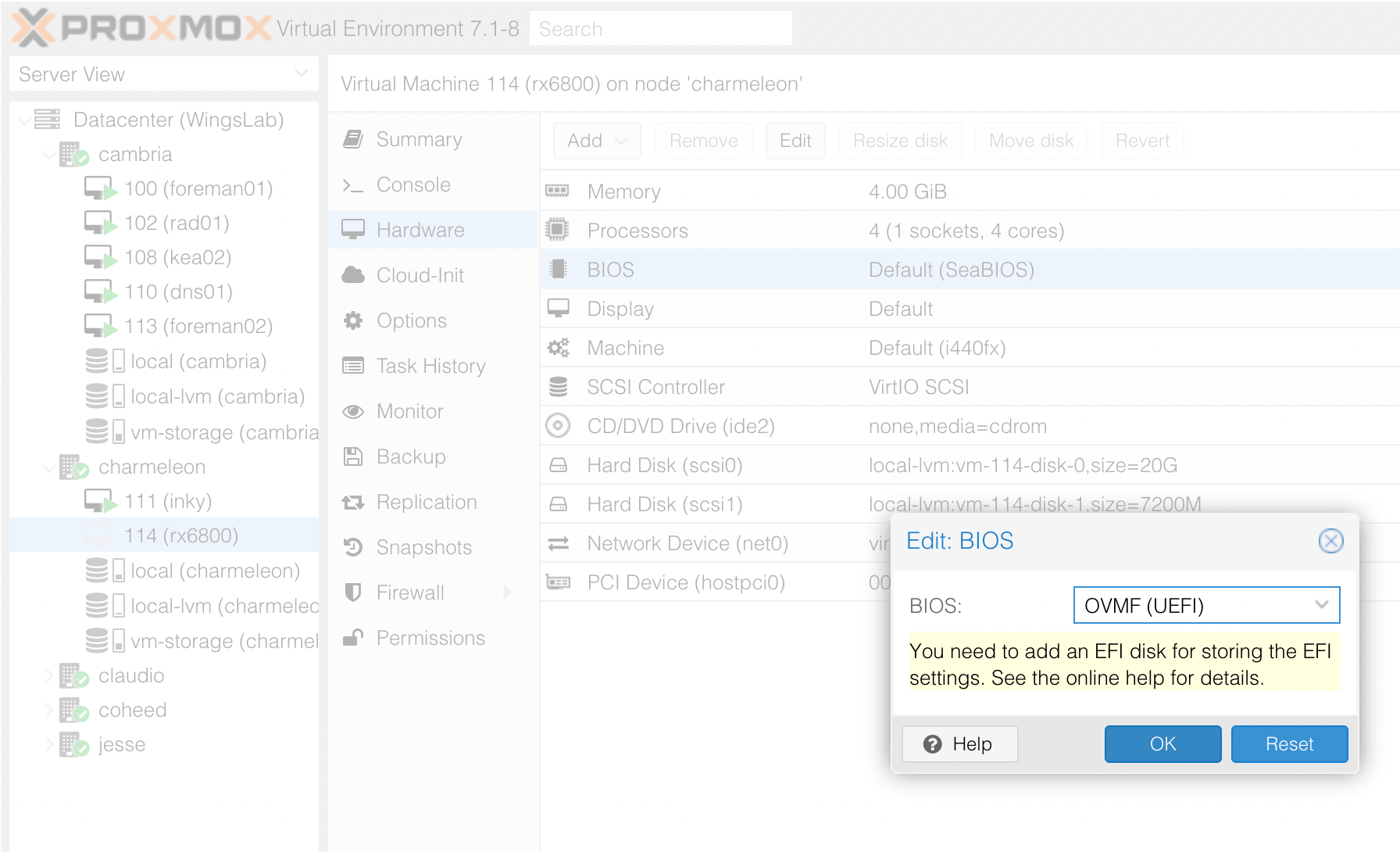

We reconfigure the VM with the OVMF UEFI BIOS:

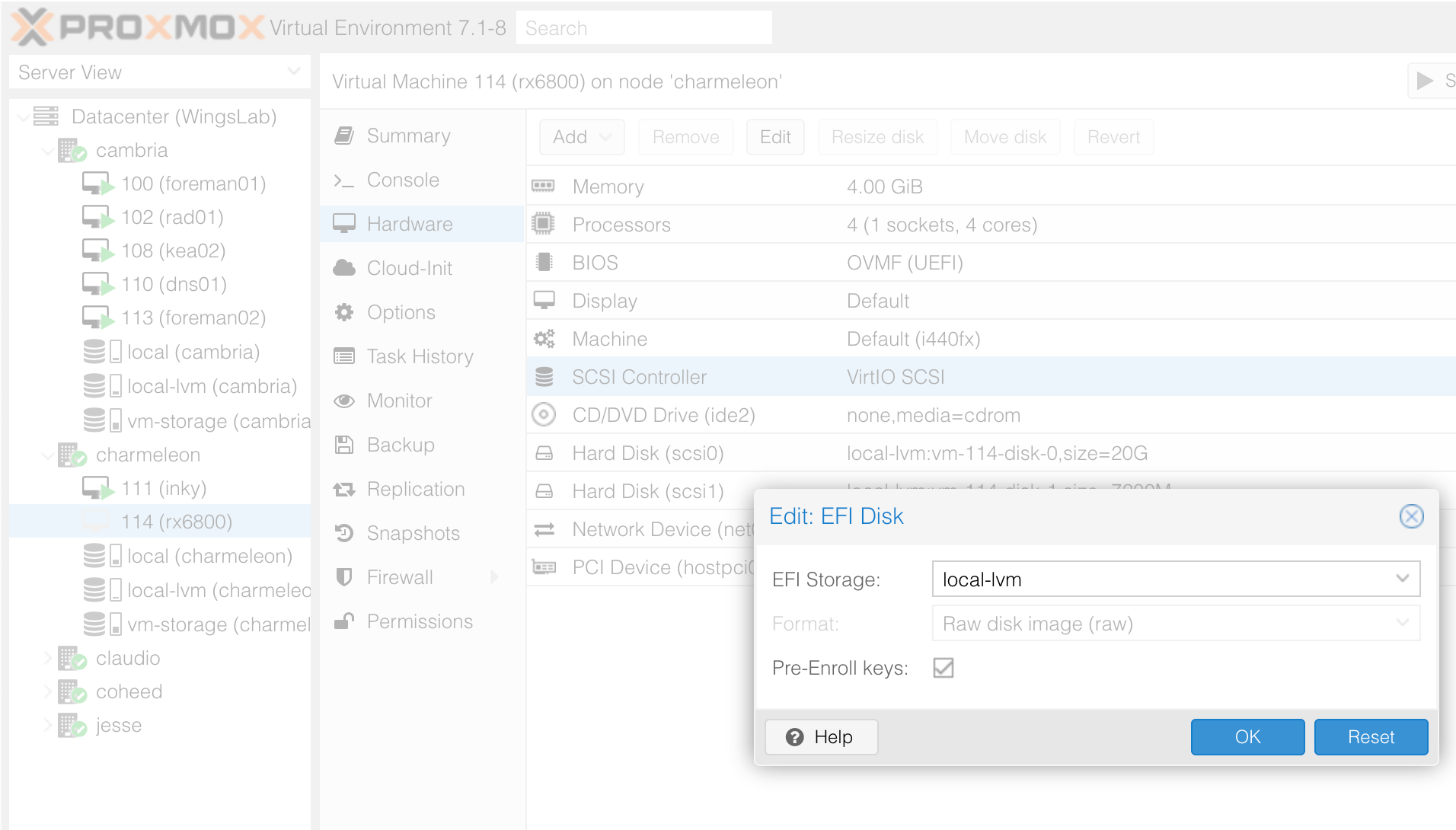

And add an EFI disk as instructed.

Starting the machine, it... didn't work. That's becoming a theme with this blog!

Changed machine type to q35, and enabled PCI-e... and it still didn't work. 🥺

Time to look around a bit.

One GRUB_CMDLINE_LINUX_DEFAULT="quiet iommu=pt amd_iommu=on video=efifb:off" in /etc/default/grub, update-grub, and update-initramfs -u later...
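Kernel parameters only take effect after update-grub and a reboot, so it is worth checking that they reached the running kernel. This is a rough sketch that compares a list of expected parameters against /proc/cmdline. The function name and the optional file argument are invented for illustration; the file argument is only there for testing.

```shell
# Print each expected kernel parameter that is missing from the running
# kernel's command line. The second argument (hypothetical, for testing)
# overrides the default /proc/cmdline.
missing_kernel_params() {
    local expected="$1"
    local cmdline_file="${2:-/proc/cmdline}"
    local cmdline param
    cmdline=$(cat "$cmdline_file")
    # $expected is deliberately unquoted so it splits on whitespace.
    for param in $expected; do
        # Pad with spaces so a parameter only matches as a whole token.
        case " $cmdline " in
            *" $param "*) ;;
            *) printf '%s\n' "$param" ;;
        esac
    done
}

# Example on the host:
#   missing_kernel_params "iommu=pt amd_iommu=on video=efifb:off"
```

No output means every parameter made it onto the command line.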

Grab some IDs:

root@charmeleon:~# lspci -n -s 01:00

01:00.0 0604: 1002:1478 (rev c3)

root@charmeleon:~# lspci -n -s 02:00

02:00.0 0604: 1002:1479

root@charmeleon:~# lspci -n -s 03:00

03:00.0 0300: 1002:73bf (rev c3)

03:00.1 0403: 1002:ab28

03:00.2 0c03: 1002:73a6

03:00.3 0c80: 1002:73a4

And then edit vfio.conf.

root@charmeleon:~# echo options vfio-pci ids=1002:1478,1002:1479,1002:73bf,1002:ab28,1002:73a6,1002:73a4 disable_vga=1 > /etc/modprobe.d/vfio.conf

Quite a few more options than last time.
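Copying six IDs by hand is easy to get wrong. As a small sketch, the ids= value can be built straight from lspci -n output, where the third whitespace-separated field is the vendor:device pair. The helper name vfio_ids is hypothetical.

```shell
# Turn `lspci -n` lines into the comma-separated value for vfio-pci's ids=.
# Reads lspci output on stdin; field 3 is the vendor:device pair.
vfio_ids() {
    awk '{ print $3 }' | paste -sd, -
}

# Example on the host, covering the two bridges and the GPU's functions:
#   { lspci -n -s 01:00; lspci -n -s 02:00; lspci -n -s 03:00; } | vfio_ids
```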

I also blacklisted the "amdgpu" kernel module:

echo "blacklist amdgpu" >> /etc/modprobe.d/blacklist.conf

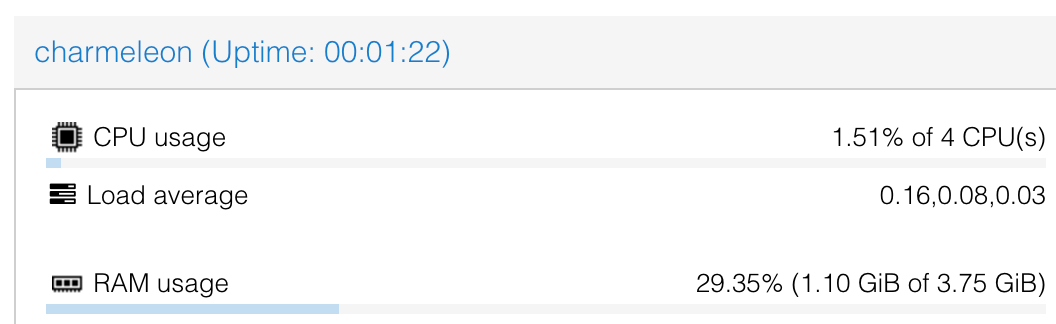

Some poking later, I discovered my host machine only has 4GiB of RAM, and I'm trying to allocate all of it.
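A quick pre-flight check would have caught this sooner. The sketch below reads MemTotal from /proc/meminfo and tests whether a VM of a given size still leaves some memory for the host. The function name, the default 1024 MiB reserve and the optional file argument are all assumptions for illustration, not a Proxmox recommendation.

```shell
# Succeed only if a VM of vm_mib MiB still leaves reserve_mib MiB for the host.
# reserve_mib (default 1024) is an arbitrary illustrative margin; the third
# argument (hypothetical, for testing) overrides /proc/meminfo.
vm_fits_in_ram() {
    local vm_mib="$1"
    local reserve_mib="${2:-1024}"
    local meminfo="${3:-/proc/meminfo}"
    local host_mib
    # MemTotal is reported in kB.
    host_mib=$(awk '/^MemTotal:/ { print int($2 / 1024) }' "$meminfo")
    [ $((vm_mib + reserve_mib)) -le "$host_mib" ]
}

# Example on the host:
#   vm_fits_in_ram 4096 || echo "VM is too large for this host"
```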

Changing the VM to use 2GiB instead, and booting it again, we get a working machine! Unfortunately it still isn't booting into HiveOS. I'm going to try a slightly different tactic - burning a USB drive with HiveOS on it, adding it to the VM and booting it.

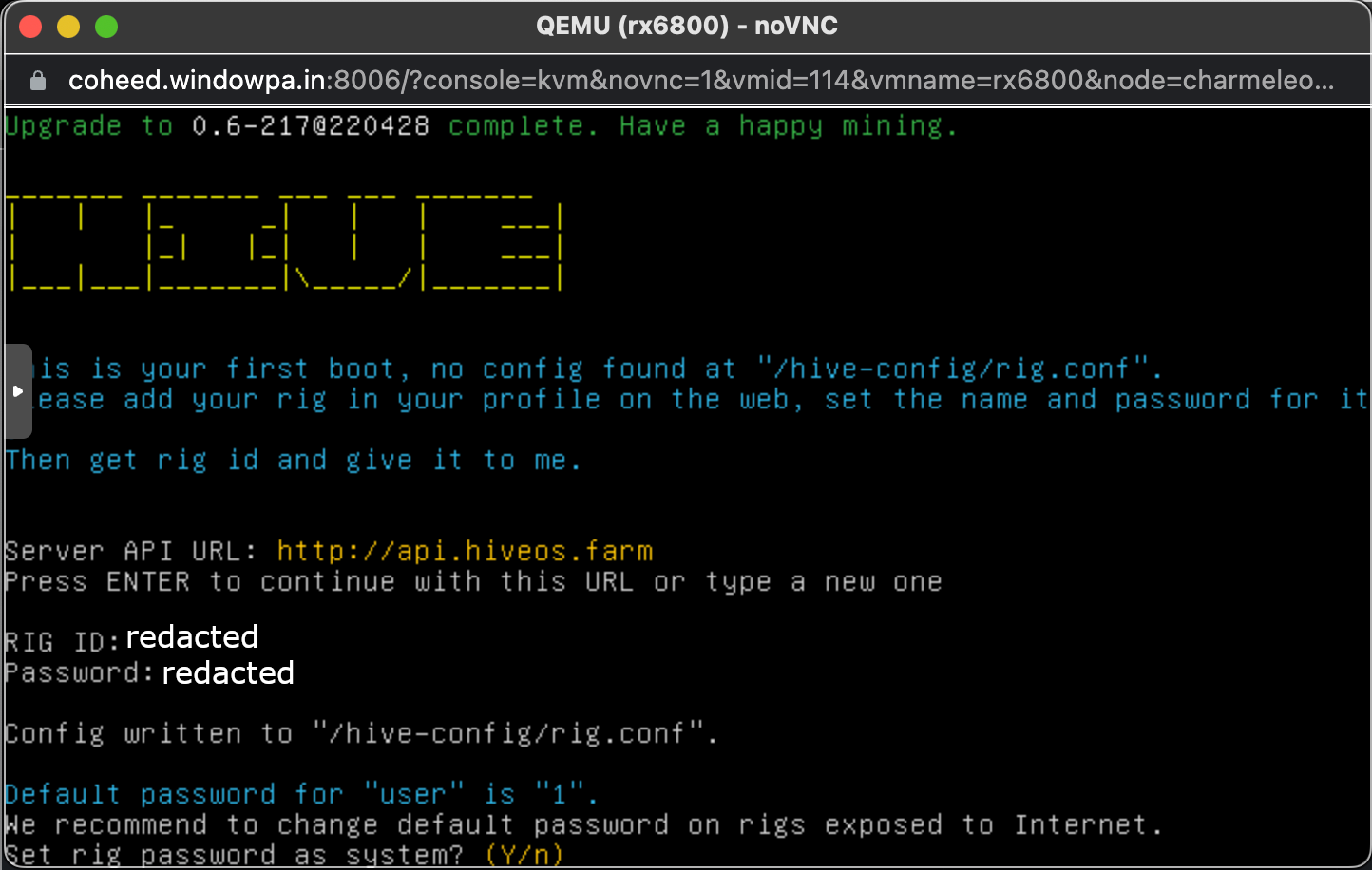

After changing to SeaBIOS, I successfully booted into HiveOS and it detected a GPU! However, I had no networking. Drat. I tried a few different network cards and settled on Realtek RTL8139 as that seemed to work.

Having settled on SeaBIOS as a solution, I went to try running with the internal/imported disk image again. It works! Nothing looks too different, but I can theoretically unplug my USB drive - and it should also be more reliable on real storage, as HiveOS' logging tends to destroy USB drives. Note: I did have to change the disk from VirtIO SCSI to SATA for HiveOS to be able to see its own boot disk.
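For the other rigs, the settings that ended up working could be applied from the command line with qm set instead of clicking through the UI. This is only a sketch under several assumptions: the bridge name vmbr0, the sata0 and hostpci0 slots, and passing the whole 03:00 device are illustrative choices, and the volume name is whatever qm importdisk reported. The function name is hypothetical.

```shell
# Apply the combination that worked here: SeaBIOS, the imported disk on SATA,
# an RTL8139 NIC and the GPU passed through.
# Assumptions: bridge vmbr0, slots sata0/hostpci0, and PCI address 03:00.
configure_hiveos_vm() {
    local vmid="$1"
    local volume="$2"   # e.g. local-lvm:vm-114-disk-1 from the import output
    qm set "$vmid" --bios seabios
    qm set "$vmid" --sata0 "$volume"
    qm set "$vmid" --boot order=sata0
    qm set "$vmid" --net0 rtl8139,bridge=vmbr0
    qm set "$vmid" --hostpci0 03:00
}

# Example on the host:
#   configure_hiveos_vm 114 local-lvm:vm-114-disk-1
```

The results can be checked afterwards with qm config 114.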

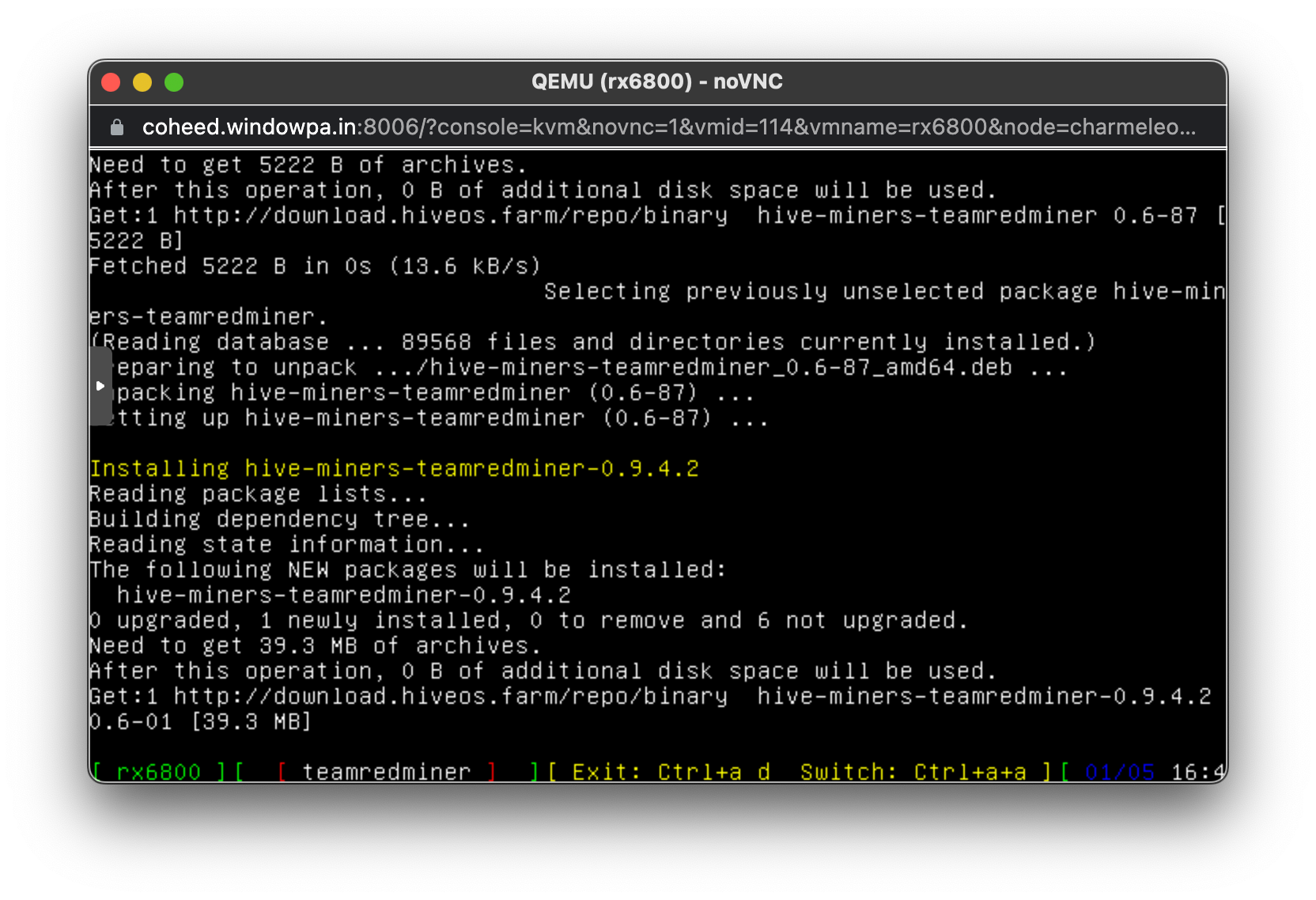

The GPU shows up and everything appears fine - but it won't mine. I quickly realised this is because the main "flight sheet" applied to the miner was set to use NVIDIA-only miners. Made a new flight sheet based on TeamRedMiner, and...

Bam! 61MH/s on Ethereum, and an instantly overheating GPU.

Huh. Updated HiveOS and rebooted, and tried again... this time, hit a stable 62MH/s (64MH/s is the theoretical sweet spot for this card) and the fans kicked in and kept it going.

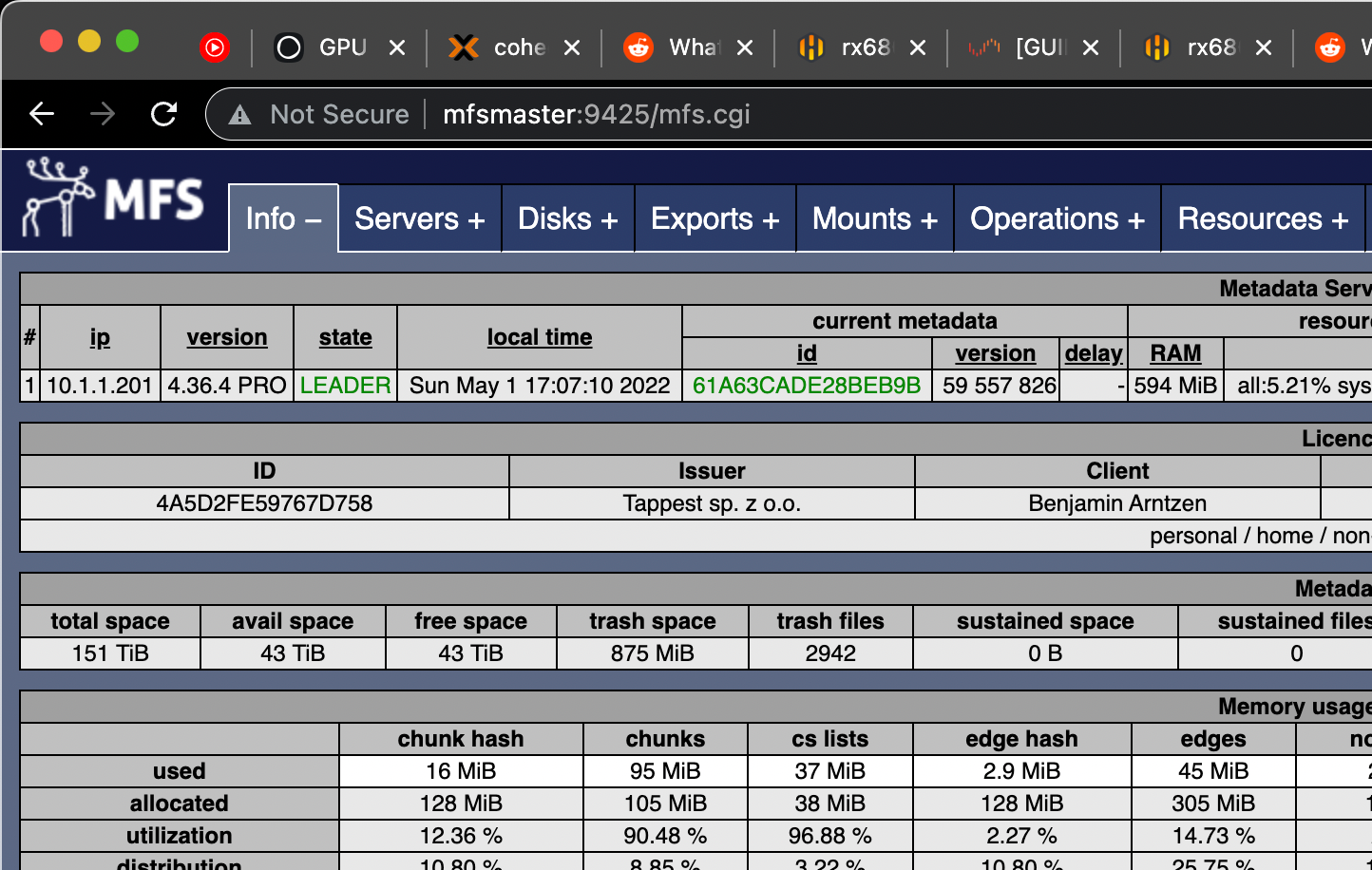

Overall I'm really happy to have another machine in Proxmox, though with the limited RAM it's not a lot of use. In any case, I also have a new MooseFS server, bringing me to...

A total of 151TiB of disk space, finally enough to use my full MooseFS Pro licence.

I'll likely convert my other two rigs over to HiveOS-on-Proxmox over the next few days and update this post with anything interesting I find in the process.